What is Sample Rate, Bit Rate, Bit Depth, and Buffer Size? (In Plain English)

Sample rate, bit rate, bit depth, and buffer size are some of the most often-confused terms in audio. As a musician, you don’t need to know every nerdy detail about each term, but you should at least know the difference between them. I’ll describe each of them in plain English, specifically in the context of audio.

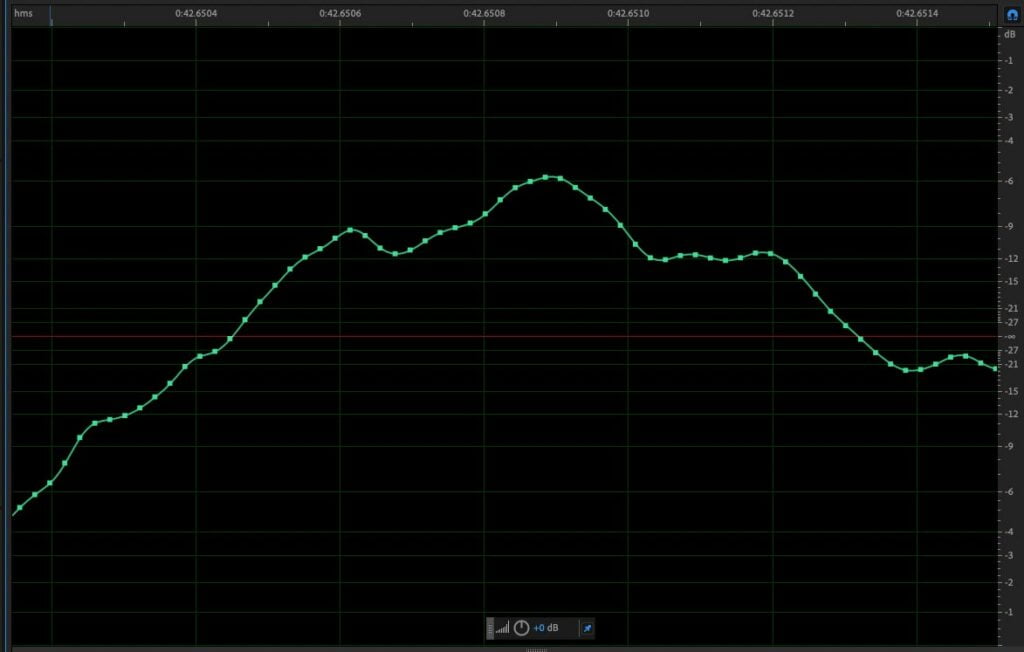

Digital audio is made by capturing sound at many different points in time. As you can see in the image above, there are a bunch of dots evenly spaced over time. Each dot represents a moment when a snapshot (a sample) of the audio is taken, and the number of samples taken per second is the sample rate. The more times per second you take a sample, the higher the frequencies of sound you can capture.

Think of sample rate as being similar to frame rate in video (it’s not a perfect analogy, but should help you understand the idea). Just like how every frame of video is a photo of a moment in time, an audio sample is measuring sound waves at a moment in time.
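To make the snapshot idea concrete, here is a minimal Python sketch that "samples" a pure tone at a given sample rate. The function name `sample_sine` and the numbers used are just illustrations I made up for this example, not anything your DAW exposes.

```python
import math

def sample_sine(freq_hz, sample_rate_hz, num_samples):
    """Take num_samples evenly spaced snapshots of a sine wave.

    Each snapshot n is taken at time n / sample_rate_hz seconds.
    """
    return [
        math.sin(2 * math.pi * freq_hz * n / sample_rate_hz)
        for n in range(num_samples)
    ]

# A 1,000 Hz tone captured at 44,100 samples per second:
# one second of audio would be 44,100 of these values.
snapshots = sample_sine(1_000, 44_100, 8)
print([round(s, 3) for s in snapshots])
```

Each number in the list is one of the dots from the image: the height of the wave at that instant.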

CDs, streaming, and most other consumer formats for music use a sample rate of 44,100 samples every second, aka 44.1 kHz. A sample rate can represent frequencies up to half its value, so 44.1 kHz supports sounds up to 22,050 Hz, which covers everything we can hear (human hearing tops out at around 20,000 Hz). However, we often record and mix at higher sample rates so we have more information to manipulate and so the filters used for processing don’t start creeping down into a range we can hear. I recommend creating at 48 kHz so you can capture sound up to 24,000 Hz, but exporting at 44.1 kHz.
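The "highest frequency you can capture" is simply half the sample rate (often called the Nyquist frequency). A quick sketch of that arithmetic, with `nyquist_hz` being a helper name I made up for this example:

```python
def nyquist_hz(sample_rate_hz):
    """Highest frequency a given sample rate can represent."""
    return sample_rate_hz / 2

for rate in (44_100, 48_000, 96_000):
    print(f"{rate} Hz sample rate -> sounds up to {nyquist_hz(rate):.0f} Hz")
```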

Bit depth refers to how many levels of amplitude a file can store (amplitude basically means volume in the signal world). Each sample has an amplitude value associated with it. The more bits you use to store each sample, the more dynamic range the file has. With too little dynamic range, your quiet sounds can’t be stored accurately and will just sound like noise when played back.
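Here is a rough sketch of what "levels of amplitude" means in practice. It rounds a sample value to the nearest level that a given bit depth can hold. The `quantize` function and the 3-bit example are my own illustration (real converters are more involved), and it assumes samples are expressed between -1.0 and 1.0.

```python
def quantize(value, bit_depth):
    """Round a sample in [-1.0, 1.0] to the nearest level a signed
    integer of bit_depth bits can hold, then scale it back."""
    max_level = 2 ** (bit_depth - 1) - 1  # e.g. 32767 for 16 bits
    return round(value * max_level) / max_level

print(2 ** 16)           # number of levels at 16 bits: 65536
print(2 ** 24)           # number of levels at 24 bits: 16777216
print(quantize(0.3, 3))  # very coarse: lands on 1/3
print(quantize(0.3, 16)) # much closer to the original 0.3
```

The fewer bits you have, the further each sample gets pushed from its true value, and that error is what you hear as noise.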

Most CDs, streaming services, etc. use 16 bits of resolution. This is more than enough resolution for listening, as it gives you 96.33 dB of dynamic range. BUT, just like sample rate, 16 bits often isn’t enough when creating music. If you record something too quietly at 16 bits and then boost the volume in the mix (or use an aggressive compressor), you might inadvertently bring the noise that sits 96 dB down up into a range where it’s more audible. (Note: this noise is in addition to any noise added by your microphone, interface, plugins, etc.)
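If you’re curious where the 96.33 number comes from, it falls out of the number of levels the bit depth gives you. A small sketch (the function name `dynamic_range_db` is mine, and this is the simple textbook formula rather than a measurement of any real converter):

```python
import math

def dynamic_range_db(bit_depth):
    """Theoretical dynamic range of linear PCM: 20 * log10(2 ** bits)."""
    return 20 * math.log10(2 ** bit_depth)

print(round(dynamic_range_db(16), 2))  # 96.33
print(round(dynamic_range_db(24), 2))  # 144.49
```

Every extra bit adds roughly 6 dB, which is why 24 bits gives you so much more room to record quietly and boost later.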

To combat this, it’s best to record at 24 bits, but dither and distribute at 16 bits.
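For the curious, here is a very simplified sketch of what going from 24 bits to 16 bits with dither can look like. It adds a tiny amount of random noise before rounding. I’m assuming one common flavor called TPDF dither; your DAW’s dither plugin may well do something different (noise shaping, for example), and `dither_to_16_bit` is a made-up name for this example.

```python
import random

def dither_to_16_bit(samples_24, rng=None):
    """Reduce signed 24-bit integer samples to signed 16-bit integers.

    Assumption: TPDF dither, built from two uniform random values,
    peaking at +/-1 of the smallest 16-bit step.
    """
    rng = rng or random.Random()
    out = []
    for s in samples_24:
        scaled = s / 256  # 24-bit scale down to 16-bit scale (2 ** 8)
        noise = rng.uniform(-0.5, 0.5) + rng.uniform(-0.5, 0.5)
        q = round(scaled + noise)
        out.append(max(-32768, min(32767, q)))  # keep within 16-bit range
    return out

print(dither_to_16_bit([0, 1000, -1000, 8_388_607, -8_388_608]))
```

The output changes slightly on every run because the noise is random, which is the point: it trades the rounding error for a steady, very quiet hiss.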

Bit rate is probably the most incorrectly used term on the list, since it sounds like both “sample rate” and “bit depth.”

The bit rate of audio refers to the amount of data used to store one second of audio, usually measured in kilobits per second (kbps).

You may say: “hey that sounds a lot like sample rate!” Almost! Sample rate refers to how we capture and reproduce something that is analog in the digital world, but BIT RATE refers to the storage of the resulting files and how large those files are.
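For uncompressed audio (like a WAV file), the bit rate is just the sample rate, bit depth, and number of channels multiplied together. A quick sketch, where `pcm_bit_rate_kbps` is a helper name I made up:

```python
def pcm_bit_rate_kbps(sample_rate_hz, bit_depth, channels=2):
    """Bit rate of uncompressed PCM audio in kilobits per second."""
    return sample_rate_hz * bit_depth * channels / 1000

print(pcm_bit_rate_kbps(44_100, 16))  # CD-quality stereo: 1411.2
print(pcm_bit_rate_kbps(48_000, 24))  # 48 kHz / 24-bit stereo: 2304.0
```

So sample rate and bit depth feed into the bit rate, but they are not the same thing.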

In fact, most of the time you hear “bit rate” discussed, it will be in the context of lossy formats like MP3, AAC, and OGG and how much they are trying to compress the resulting file’s size.

This is done by applying algorithms to the audio so that “unnecessary” sounds are removed or approximated. You’ll still have a SAMPLE rate of 44.1 kHz or 48 kHz, but the music is altered so that the gist of the audio is there while taking up far less space. How good it sounds really depends on the format, encoder, etc., as they all have different things they aim to manipulate for the sake of a smaller file.
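To get a feel for the difference in file size, you can multiply the bit rate by the length of the song. In this sketch the 128 kbps figure and the three-minute song are just example numbers I picked, and `file_size_mb` is a made-up helper that ignores file headers and metadata.

```python
def file_size_mb(bit_rate_kbps, seconds):
    """Approximate audio file size in megabytes (1 MB = 1,000,000 bytes)."""
    bits = bit_rate_kbps * 1000 * seconds
    return bits / 8 / 1_000_000

song_seconds = 3 * 60
print(round(file_size_mb(128, song_seconds), 2))     # lossy file at 128 kbps
print(round(file_size_mb(1411.2, song_seconds), 2))  # uncompressed CD-quality stereo
```

Same song, same sample rate, but the lossy version is roughly a tenth of the size.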

Buffer size is the odd one out on this list, but I had to include it because so many people call it “sample rate” by accident (which is understandable, because you’re telling the computer how many samples to process at a time).

Buffer size is how large a chunk of audio your computer/DAW processes at a time (the number of samples). Bigger chunks take more time to fill and process, while smaller chunks are processed quickly. You’ll see this as an option in your DAW’s preferences (32, 64, 128, 256, 512, etc.).

Your computer can collect and work through 32 samples of data a lot sooner than it can 512 or 1024 samples, which is why larger buffer sizes lead to more latency.
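You can estimate how much delay a buffer adds by dividing the buffer size by the sample rate. This sketch only covers the time one buffer’s worth of audio represents; the real round-trip latency you feel also includes your interface, drivers, and plugins. `buffer_latency_ms` is a name I made up for the example.

```python
def buffer_latency_ms(buffer_size, sample_rate_hz=44_100):
    """Time in milliseconds that one buffer of audio represents."""
    return buffer_size / sample_rate_hz * 1000

for size in (32, 64, 128, 256, 512, 1024):
    print(f"{size:>4} samples -> {buffer_latency_ms(size):.2f} ms")
```

At 44.1 kHz, 32 samples is under a millisecond, while 1024 samples is over 23 ms, which is enough to feel when you’re tracking.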